Many SEO practitioners focus on on-page and off-page strategies, which go a long way toward moving a site up the Google SERPs. However, a Technical SEO Audit is another area you should not neglect, because it helps you catch minor and major issues hidden on a site.

After all, any system can fail to run efficiently because a single piece is missing from the picture, right? And you wouldn’t want to wonder why your site isn’t ranking when the root cause is simply speed or platform accessibility, among others.

What to take note of when doing a Technical SEO Audit in 2020

Recognizing the importance of site technicalities in SEO means digging deep into a pool of data and systems so your site can climb the ranks smoothly. Keep this checklist at hand to be sure you miss nothing during your audit.

Tracing Traffic History

Especially when dealing with a client’s website, remember to conduct a traffic history audit to make sure nothing went wrong with the site in the past. Google is strict about mistakes and penalties, and a past problem can make it hard for any site to rank.

Remember to implement these steps:

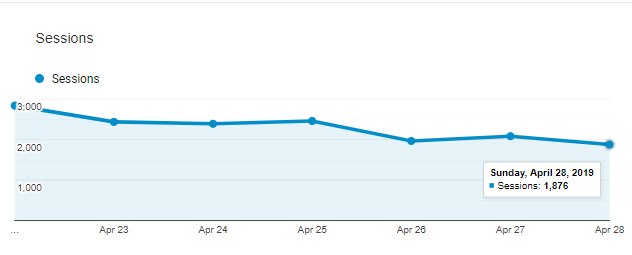

- Look at Google Analytics traffic data for the past two years, and note any dramatic peaks and falls in the graphs. These are usually caused by website changes or Google algorithm changes.

- Ask the client whether a redesign, a new section launch, a domain migration or a penalty coincided with those traffic fluctuations. If no big change happened during that time, use Moz’s Google algorithm update history to find out whether Google released a significant update that could explain the traffic drop or rise.

- If the site did not go through big changes and was not affected by an update at that time, check its link data using Google Search Console.

- Lastly, investigate the site for on-page technicalities that could have affected its traffic, or for updates that may have hurt it. If the website has changed and no longer looks the way it did at the time of the update, you can use archiving tools to see how it looked back then.

Detecting such past problems on a website helps you address them properly, so the site is clean as you work on moving it up the SERPs.

Set up Google Search Console

Google Search Console is a free yet very valuable tool from Google that you can use for auditing your website. Ask your client for access, or set it up yourself if it is not yet configured on the client’s website.

Validating Robots.txt

You can stop crawlers from crawling and indexing certain pages on your website, specifically pages that you don’t want the public to see. You do this by writing a Robots.txt file and, where needed, combining it with the noindex tag.

For example, this can bar crawlers from your admin login page, so it is less likely to surface in search results where attackers could find it. It can also keep crawlers away from irrelevant pages, so they can focus on your more important pages.
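One way to check that a Robots.txt file behaves as intended is Python’s standard `urllib.robotparser` module. The sketch below is only illustrative: the rules, the `/wp-admin/` and `/search/` paths, and the `is_allowed` helper are assumptions and do not come from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration; replace with the site's real robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /search/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/wp-admin/login.php", "/blog/technical-seo-audit"):
        url = "https://www.yoursite.com" + path
        verdict = "allowed" if is_allowed(ROBOTS_TXT, "Googlebot", url) else "blocked"
        print(path, verdict)
```

Running the same check against every URL you want ranked is a quick way to catch a rule that blocks more than you meant it to.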

Auditing Meta Robots Tag

You can instruct crawlers on how to treat certain pages on your site by adding a Meta Robots Tag to them. The noindex value, for example, prevents crawlers from indexing a page.

Just be careful, though: avoid putting restrictive Meta Robots Tags such as noindex on pages you want to show on the SERPs, like blog pages and static pages.
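To audit this at scale, you can extract the Meta Robots Tag from each page’s HTML and flag pages that are accidentally set to noindex. The following is a minimal sketch using Python’s standard `html.parser`; the helper names `robots_directives` and `is_indexable` are made up for this example.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collects the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",") if d.strip()]


def robots_directives(html: str) -> list:
    """Return the lowercased robots directives declared in the page."""
    parser = MetaRobotsParser()
    parser.feed(html)
    return parser.directives


def is_indexable(html: str) -> bool:
    """A page is treated as indexable unless it declares noindex (or none)."""
    directives = robots_directives(html)
    return "noindex" not in directives and "none" not in directives
```

For example, `is_indexable('<meta name="robots" content="noindex, follow">')` returns `False`, which would be a red flag on a blog post you expect to rank.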

Auditing Canonical Tag

Canonical tags, or rel=canonical, are your way of telling crawlers which page to index among several very similar pages. A canonical tag is a short snippet of code you place in the HTML head of a page.

If you have a site that sells the same brand and model of shoes in different colors, for example, you may need a page for each color. Thing is, those pages probably look very similar, save for the color and the image.

That could give you the problem of content duplication.

Crawlers will crawl all of those pages, then choose one to treat as the master version to index and rank on the SERPs. However, since every page is still crawled, this eats into your crawl budget, which could affect your site’s overall visibility.

A canonical tag helps you point crawlers to the page you want indexed, which they will then consider your master version.
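A canonical audit usually comes down to one question per page: does the tag exist, and does it point where you expect? The sketch below shows one way to answer that with Python’s standard library. The `canonical_status` helper and its return labels are assumptions made for illustration.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rel_tokens = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_tokens and attr.get("href"):
            self.canonicals.append(attr["href"])


def canonical_status(page_url: str, html: str) -> str:
    """Classify a page as 'missing', 'conflicting', 'self' or 'points elsewhere'."""
    parser = CanonicalParser()
    parser.feed(html)
    targets = set(parser.canonicals)
    if not targets:
        return "missing"
    if len(targets) > 1:
        return "conflicting"
    return "self" if page_url in targets else "points elsewhere"
```

In the shoe example, each color page would be expected to report `points elsewhere`, while the master page reports `self`.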

Auditing Pagination Tags

Some sites split a lengthy page into a series of pages to avoid overloading a single URL. However, crawlers will still crawl each page in the series, which can be a problem if they index all of them.

You only want the first page to be indexed.

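To solve it, use rel=prev and rel=next in the HTML head of each page in the series. Don’t use rel=canonical for this, as it serves a different purpose. Keep in mind that Google announced in 2019 that it no longer uses rel=prev and rel=next as an indexing signal, although the markup remains valid and other search engines may still read it.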

Auditing XML Sitemap

An XML Sitemap gives an overall view of your site’s URLs and guides crawlers on which pages to crawl and index. That helps you make your site visible on the web efficiently.

- Make sure that your site has an XML Sitemap, regardless of which platform it runs on. Check that its format and syntax are correct so it works smoothly.

- Submit it to Google Search Console so you can clearly see the indexed and non-indexed pages on the website.

- Deal with 404 URLs accordingly, and remove them from the sitemap unless you have reasons to leave them that way temporarily.

- Be sure that none of the pages you want indexed are blocked by Robots.txt, carry a noindex Meta Robots Tag, or have canonical tags pointing to other pages.
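The checks in this list can be cross-referenced automatically once you have crawl data for the URLs in the sitemap. Below is a minimal sketch: `sitemap_urls` reads the `<loc>` entries with Python’s standard XML parser, and `sitemap_issues` compares them against a `crawl_data` dictionary. The shape of `crawl_data` (keys `status`, `noindex`, `canonical`) is an assumption; adapt it to whatever your crawling tool exports.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> list:
    """Return every <loc> URL listed in a sitemap document."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]


def sitemap_issues(urls: list, crawl_data: dict) -> dict:
    """Map each problematic sitemap URL to the list of issues found for it."""
    issues = {}
    for url in urls:
        page = crawl_data.get(url)
        if page is None:
            issues[url] = ["not crawled"]
            continue
        found = []
        if page["status"] != 200:
            found.append(f"status {page['status']}")
        if page.get("noindex"):
            found.append("noindex")
        canonical = page.get("canonical")
        if canonical and canonical != url:
            found.append("canonical points elsewhere")
        if found:
            issues[url] = found
    return issues
```

An empty result means every sitemap URL returned 200, is indexable, and is its own canonical, which is the state this checklist aims for.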

Check your preferred Domain

Your website probably has a few versions: one with www, one without it, an http version, and an https (secure) version.

You wouldn’t want these versions to compete with each other in the rankings, so you should choose which version you prefer. You can do it with Google Search Console.

- Begin by searching for “yoursite.com” on Google, so you can see which versions you probably have.

- Create a Google Search Console account for each version.

- Open the accounts one by one, find the gear icon, click Settings, then click Preferred domain. That’s where you can tell Google which version to consider your real site.

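This tells Google which version to rank on the SERPs and avoids splitting your authority across the different versions. Note that Google retired the preferred domain setting from Search Console in 2019; where it is no longer available, redirects and canonical tags that point to your chosen version serve the same purpose.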
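As a rough illustration of the four versions described above, the sketch below lists them for a domain and reports which ones do not end up at the preferred version. The `final_urls` mapping (each variant to the URL it finally resolves to) is assumed to come from your own crawl; both helper names are hypothetical.

```python
def site_variants(domain: str) -> list:
    """Return the http/https and www/non-www versions of a bare domain."""
    return [
        f"{scheme}://{prefix}{domain}"
        for scheme in ("http", "https")
        for prefix in ("", "www.")
    ]


def stray_variants(domain: str, preferred: str, final_urls: dict) -> list:
    """Return the variants that do not resolve to the preferred version.

    final_urls maps a variant to the URL it ends at after redirects;
    a variant missing from the mapping is assumed not to redirect at all.
    """
    target = preferred.rstrip("/")
    return [
        variant
        for variant in site_variants(domain)
        if final_urls.get(variant, variant).rstrip("/") != target
    ]
```

Any variant returned by `stray_variants` is a candidate for a 301 redirect to the preferred version.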

Check URL Structure and Slugs

Make sure the URL slugs of all the pages on your website are readable and understandable by both people and crawlers. For example, the slug in www.yoursite.com/2468_bestmsc2020 doesn’t make any sense compared to www.yoursite.com/bestmusic2020.

Readable slugs like the latter are what this checklist calls canonical URL slugs, and they help a site rank better than arbitrary strings of alphanumeric codes.

All pages on your site should have canonical URL slugs, so people and crawlers can read and understand them at first glance. Audit the site’s URLs and move every non-canonical slug to a canonical one, redirecting the old URL to the new one.
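When renaming slugs in bulk, a small helper that derives a readable slug from the page title keeps the result consistent. The sketch below follows the common convention of lowercase words separated by hyphens, which is an assumption on top of the examples above; the `slugify` name is hypothetical.

```python
import re


def slugify(title: str) -> str:
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Replace every run of characters that is not a-z or 0-9 with one hyphen,
    # then trim hyphens left over at either end.
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


if __name__ == "__main__":
    print(slugify("Best Music 2020!"))  # best-music-2020
```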

Audit for Crawl Anomaly

Crawlers sometimes crawl your pages successfully, only to return later and find them inaccessible. That can be caused by a 404 error, a soft 404, or server errors.

You can spot crawl anomalies in Google Search Console under Index > Coverage. Run the reported URLs through a crawling tool as well, to validate the server response codes they return.

A crawling tool can reveal false positives, soft 404s or site errors. False positives are reported crawl anomalies that turn out to be fine and demand no further action from you, while soft 404s are pages that return 200 OK even though they display a “not found” message.

Lastly, site errors are caused by technical issues, which require help from web developers to fix.
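The distinctions above can be expressed as a simple classification over the status code and page body that your crawling tool returns. This is a heuristic sketch only: the phrase list used to guess at soft 404s is an assumption and will need tuning for each site.

```python
# Hypothetical phrases that suggest a "not found" page; tune these per site.
NOT_FOUND_PHRASES = ("page not found", "no longer available", "does not exist")


def classify_response(status: int, body: str) -> str:
    """Label a crawled response as ok, possible soft 404, not found, or server error."""
    if 500 <= status <= 599:
        return "server error"
    if status in (404, 410):
        return "not found"
    if status == 200:
        text = body.lower()
        if any(phrase in text for phrase in NOT_FOUND_PHRASES):
            return "possible soft 404"
        return "ok"
    return "other"
```

URLs that Search Console reports as anomalies but that come back as `ok` here are likely the false positives mentioned above.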

International Targeting or Website Localization

Localizing a website doesn’t simply involve using local SEO keywords. It also involves international targeting and the use of a proper Top Level Domain (TLD), so that search results are geographically relevant to the user.

For instance, if a person in Brisbane wants to find a nearby steak restaurant, sites of restaurants located in England, Hong Kong and Russia shouldn’t show up. This is where country-specific domains play a big role, like .au, .uk, .jp or .sg.

If your site uses a neutral TLD like .com or .net, Google will simply infer your geographic relevance from the inbound links you receive. That’s not a reliable way to localize your site, since you could receive links from sites all over the world.

Thus, don’t forget to set your target country in Google Search Console, under its international targeting options.

Auditing Structured Data

Structured data is markup that helps Google correctly identify the different entities on a web page. Nouns such as names, contact numbers, addresses, brands and events can be marked up with it, so Google won’t simply dismiss them as meaningless text.

Thus, check whether your site has structured data supporting it, and add markup using appropriate tools where it is missing.
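JSON-LD is one common format for such markup. The sketch below builds a schema.org `LocalBusiness` snippet with Python’s `json` module; the helper name and all business details are placeholders chosen for illustration.

```python
import json


def local_business_jsonld(name, telephone, street, city, country):
    """Return a JSON-LD <script> snippet describing a local business."""
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "telephone": telephone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressCountry": country,
        },
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


if __name__ == "__main__":
    # Placeholder values, not a real business.
    print(local_business_jsonld("Example Steakhouse", "+61 7 5550 0000",
                                "1 Example St", "Brisbane", "AU"))
```

The resulting snippet goes in the page’s HTML, and a structured data testing tool can confirm that the entities are recognized.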

Audit your Internal Links

Broken and problematic internal links can seriously affect your keyword rankings, since they interrupt the flow of crawlers as they scan your site. They also hurt your users’ experience, potentially making your site look unreliable.

Good links lead straight to the intended internal location and are easy for users to see. They should have anchor text that is relevant to the target page, and should not redirect to a different page or end in a 404 error.

With the help of a reliable crawling tool, you can audit all of the internal links on your website. Make sure every single link is of high quality and is active.
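If you want to see what a crawling tool does under the hood, the sketch below extracts the internal links and their anchor text from one page using Python’s standard library. The `internal_links` helper is hypothetical, and checking whether each link is alive would still require fetching it.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects (absolute_url, anchor_text) for every <a href> on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page URL.
                self._href = urljoin(self.base_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def internal_links(page_url: str, html: str) -> list:
    """Return the links on the page that stay on the same host."""
    collector = LinkCollector(page_url)
    collector.feed(html)
    host = urlparse(page_url).netloc
    return [(url, text) for url, text in collector.links if urlparse(url).netloc == host]
```

Links that come back with empty anchor text are worth a second look, since the anchor should describe the target page.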

Keep an Eye on Follow/Nofollow Attributes

Be sure to set appropriate follow or nofollow attributes on each of your outbound links, so you can control how PageRank passes to a link’s destination.

If an external link in your content points to a page you do not want to endorse, for example, add the nofollow attribute so it does not pass PageRank to that destination.
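To review these attributes across a page, you can list every outbound link together with whether it carries `rel="nofollow"`. The following is a minimal sketch with Python’s standard library; the `outbound_links` helper is a made-up name, and deciding which links should be nofollow remains an editorial call.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class OutboundLinkParser(HTMLParser):
    """Collects (href, has_nofollow) for links that leave the site."""

    def __init__(self, site_host):
        super().__init__()
        self.site_host = site_host
        self.outbound = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        href = attr.get("href") or ""
        host = urlparse(href).netloc
        # Relative links have no host and therefore stay on the site.
        if host and host != self.site_host:
            rel_tokens = (attr.get("rel") or "").lower().split()
            self.outbound.append((href, "nofollow" in rel_tokens))


def outbound_links(site_host: str, html: str) -> list:
    """Return every external link on the page with its nofollow flag."""
    parser = OutboundLinkParser(site_host)
    parser.feed(html)
    return parser.outbound
```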

Audit on Information Architecture

Information architecture (IA) is the overall technical, design and functional framework of a shared information platform, which includes websites. Although it may seem to have little bearing on SEO, it actually affects how crawlers flow through a website, which in turn affects indexing.

And there are key factors you should consider in auditing a site’s IA:

Use of Subdomains is now discouraged

Subdomains are domains nested under a main domain name, and they act as separate web entities from the main domain. For example, if you have a site that sells automobiles, you can create subdomains under the site’s main domain to sell specific brands of cars.

However, the use of subdomains can actually hurt the main domain in terms of SEO. The biggest reason is that crawlers treat each subdomain as a separate website, and each one even requires its own Google Search Console and Analytics setup.

Fancy Search Filters could hurt a site’s SEO too

Some websites make navigation much easier through search filters, like those you find on ecommerce sites and online dating platforms. For example, users can enter a keyword, then tick some checkboxes to narrow down the results.

Although that’s undeniably great for users, it’s a totally different story for web crawlers.

That’s because every single search can produce a unique URL, since each user filters the results the way they want or need. That results in thousands of unindexed pages on the website, generates a huge bulk of crawl errors once those URLs expire, and weakens the flow of link equity to internal pages other than the home page.

Optimize the use of Cascading Navigational links

Cascading navigational links help crawlers flow smoothly throughout your site, passing sufficient equity to all pages. That helps regardless of how big your site is.

For example, begin by linking properly from your home page to your main internal pages. For pages on lower levels, link to them from their subcategory pages so crawlers can simply pass through.

Auditing your Redirects

Make sure all redirects on your website work smoothly and don’t lead to dead pages. After all, the main purpose of a redirect is to guide users away from a deleted page to another page that is relevant to them.

With the help of a crawling tool, make sure that your redirects meet these conditions:

- They do not point to another redirect and form a chain. In such cases, the destination itself responds with a 3XX status. Update the flow so your first redirect points straight to the end destination.

- They do not end in a 4XX or 5XX error, which means the redirect points to a dead page. When you spot such an error, update the redirect to an appropriate destination, or simply remove it.

- The 2XX OK response comes from the destination link, not from the source link. If the source link itself returns 2XX, your redirect isn’t working the way it should.
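The three conditions above can be checked by tracing each redirect hop by hop. The sketch below works on a `responses` dictionary that maps a URL to its `(status, location)` pair, which is assumed to come from your crawling tool’s export; the function name and verdict labels are hypothetical.

```python
def trace_redirect(start: str, responses: dict, max_hops: int = 10):
    """Follow redirects from start and return (chain, verdict).

    responses maps a URL to (status_code, location_or_None).
    URLs missing from the mapping are treated as 404.
    """
    chain = [start]
    url = start
    for _ in range(max_hops):
        status, location = responses.get(url, (404, None))
        if 300 <= status < 400 and location:
            if location in chain:
                return chain + [location], "loop"
            chain.append(location)
            url = location
            continue
        if 200 <= status < 300:
            if len(chain) == 1:
                return chain, "no redirect"
            # Exactly one hop is a clean redirect; more than one is a chain.
            return chain, "ok" if len(chain) == 2 else "chain"
        return chain, "dead end"
    return chain, "too many hops"
```

A `chain` verdict means the first URL should be repointed to the last URL in the returned list, and `no redirect` on a URL you expected to redirect corresponds to the third condition in the list.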

Setup and Audit SSL

Google has said that secure URLs are a ranking signal, although the SEO advantage they give is currently minor. Thing is, a poor implementation can severely hurt your site.

Thus, only adopt SSL when you have enough time and skill to implement it properly. Moreover, remember these factors along the way:

Proper Redirects from HTTP to HTTPS

All pages from your old HTTP website should be redirected to their respective HTTPS counterparts on a 1:1 basis. Make sure every HTTP URL returns a “301 Moved Permanently” response.

That tells you all HTTP pages have been properly redirected or migrated to their HTTPS counterparts.
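The 1:1 rule is easy to verify mechanically: for each HTTP URL, the response should be a 301 whose target is the same URL with only the scheme changed. The sketch below assumes you already have the status code and `Location` header for each URL from a crawl; `check_https_redirect` is a hypothetical helper name.

```python
from urllib.parse import urlsplit


def check_https_redirect(http_url: str, status: int, location: str) -> str:
    """Return 'ok' if http_url 301-redirects to its exact HTTPS counterpart."""
    # The expected target keeps host, path and query, and changes only the scheme.
    expected = urlsplit(http_url)._replace(scheme="https").geturl()
    if status != 301:
        return f"expected 301, got {status}"
    if location != expected:
        return f"expected redirect to {expected}"
    return "ok"
```

A common failure this catches is every HTTP page redirecting to the HTTPS home page instead of to its own counterpart.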

Clerical SSL Mistakes

Be sure to avoid these SSL mistakes, which a site can pick up while acquiring and installing an SSL certificate. They could hurt your SEO as well.

- Browsers treat the site’s SSL certificate as untrusted.

- The intermediate SSL certificate is missing.

- The website uses a self-signed SSL certificate.

- The name on the SSL certificate does not match the domain.

This makes it important to find a reliable expert with sufficient knowledge, skills and tools to carry out a clean transition or migration to HTTPS.

Site Speed Testing

Your site’s load speed can greatly influence its ranking, since it affects how long visitors stay. Thus, it’s important to balance the overall design of a website with excellent speed, which makes it necessary to consider these factors:

- A site shouldn’t carry unnecessarily long code that slows it down. You can hire a web developer to check the site and see whether each line of HTML, CSS and JavaScript is really needed.

- A Content Delivery Network (CDN) may be necessary for large websites. A CDN spreads the work across a network of proxy servers, which together deliver the site’s content to visitors. That results in better speed and stability for the website.

- Be sure all hosting servers are of high quality and speed.

- Optimize images so they won’t severely hurt the speed of the website. For example, reduce their file size and dimensions, or use a CDN to make images load faster.

Mobile Accessibility Test

Mobile devices are now the primary way people access the internet, so it’s important for websites to be friendly to them. If your site isn’t accessible enough on mobile devices, you should consider redesigning it.

To check your site’s mobile accessibility, you can use Google’s Mobile-Friendly Test tool and simply enter your site’s URL. It will show you the result you need.

Mobile Crawlability

Google is now shifting its focus from desktop to mobile, simply because mobile devices are now the main way people access the internet. Thus, be sure that the mobile versions of your sites are optimized as well, with a focus on these factors:

- Mobile load speed

- Load prioritization should focus on the top of the page first, so users can easily scroll down.

- Any site should be easy to use on a mobile device in general, beyond simply being readable on smaller screens.

Conclusion

To sum it up, what you need to remember in a technical SEO audit is website availability, performance, crawlability, code cleanliness and proper use of server resources. That can help you move your site up the ranks, especially when you combine it effectively with on-page and off-page SEO techniques.
